Brief Reviews on Quantum Machine Learning

This review is written by non-expert and used for personal study only.

Quantum machine learning (QML) represents an exciting frontier where the immense processing power of quantum computing intersects with the predictive capabilities of machine learning. This paradigm shift promises to redefine data analytics and unlock complex patterns in data, which have remained elusive until now.

Quantum Algorithm and Quantum Machine Learning

For hundreds of years, humans have exhibited remarkable prowess in discerning patterns from vast amounts of data, with the groundwork of mathematical techniques originating from astronomical data analysis. With the advent of digital computers, this capacity evolved into automated data analysis techniques, leading to the establishment of linear algebraic data analysis methodologies, and sophisticated learning methods such as deep learning networks. These advancements have equipped us to identify complex and nuanced patterns from massive data troves.

Quantum algorithms have shown remarkable potential to outpace classical algorithms in specific problem-solving contexts—a phenomenon referred to as quantum speedup. This capacity to outstrip classical algorithms is reminiscent of Shor’s polynomial-time quantum algorithm for integer factorization. However, accurately benchmarking the upper limits of classical algorithms remains a task of great complexity.

Identifying the superiority of quantum machine learning over classical methods necessitates a quantum computer and revolves around a benchmarking conundrum. Standardized measures from complexity theory, like query and gate complexity, serve as parameters to quantify quantum speedups in machine learning. However, ascertaining the resource requirements for executing both classical and quantum machine learning algorithms poses a significant challenge, necessitating intricate numerical experimentation.

Quantum algorithms integrated into machine learning applications demonstrate quantum speedups, potentially eclipsing classical computing in machine learning tasks. These quantum speedups extend to a wide array of data analysis and machine learning algorithms via quantum basic linear algebra subroutines (qBLAS).

Data Analysis

Quantum computers could potentially identify patterns within data that classical computers might overlook. They offer the dual advantage of being capable of analyzing both classical and quantum data, thus broadening the scope of data analysis.

Classical machine learning encompasses both supervised and unsupervised learning techniques for data analytics. While the former leverages labeled training data, the latter identifies inherent categories in unlabeled data, often employing a combination of both methods. Quantum computers amplify these capabilities, performing linear algebraic operations at a scale exponentially higher than classical computers.

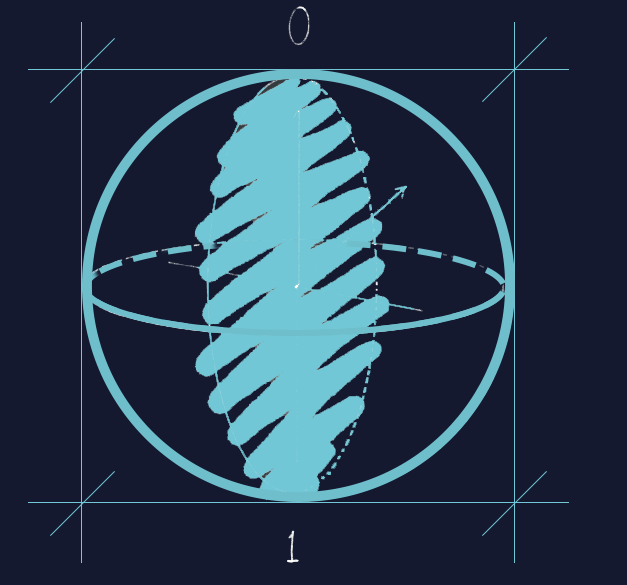

Linear-algebra-based quantum machine learning utilizes matrix operations on high-dimensional vector spaces for data analysis. Techniques such as Principal Component Analysis (PCA) summarize correlations between different data components, while its quantum counterpart—Quantum Principal Component Analysis (QPCA)—employs quantum algorithms to dissect data vectors into principal components and eigenvalues.

Quantum Support Vector Machines—another variant of quantum machine learning algorithms—leverage quantum computing to construct the optimal separating hyperplane between data classes, with successful applications in tasks like handwritten digit recognition. These methods can resolve optimization problems using D-Wave processors (in this paper) and the HHL matrix inversion algorithm, offering an exponential speedup over classical algorithms.

Despite these promising developments, quantum machine learning also faces significant challenges. Converting classical data into quantum states and outputting quantum states into classical data often results in operational slowdowns in certain algorithms, such as HHL and Quantum Support Vector Machines.

Developing deep quantum learning methods using special-purpose quantum information processors like quantum annealers and programmable photonic circuits—which are easier to construct and scale up than general-purpose quantum computers—represent a viable solution. This approach allows for the quantization of a Boltzmann machine by expressing it as a set of interacting quantum spins, thereby facilitating deep quantum learning protocols.

Direct application of quantum machine learning to quantum data unveils underlying features and patterns more efficiently than classical analysis. Quantum simulators offer an efficient way to understand the dynamics of unknown quantum systems using approximate Bayesian inference. Furthermore, quantum coherence can lower the number of samples required to learn the desired task, reducing access requests to training data.

However, implementing coherent input states presents a significant technical hurdle, despite the potential for exponential speedups in device characterization, making quantum machine learning a promising prospect in the foreseeable future.

Tuning quantum gates for error correction remains a complex task in quantum computation. However, heuristic search methods, genetic algorithms, and stochastic gradient descent have been successful in curbing digital and experimental errors in quantum gates. Learning methods have also proven effective in developing control sequences to optimize adaptive quantum metrology.

Classical machine learning methods, such as neural networks, have been employed to study phase-of-matter detection and ground-state search in condensed matter, achieving superior performance compared to established numerical tools. Small quantum computers and special-purpose quantum simulators also show promising potential for application in machine learning and data analysis.

Despite these advancements, quantum hardware remains a bottleneck. While enabling technologies for quantum computing are progressing rapidly, we still face challenges in improving connectivity, constructing interface devices, and determining the true number of gates required. Addressing four key challenges—the input problem, the output problem, the costing problem, and the benchmarking problem—will pave the way for the wider application of quantum algorithms.

Reference

- Biamonte, J. et al. Quantum Machine Learning. Nature 549, 195–202 (2017).

Leave a comment